Month: July 2017

-

Process: Puzzler 👨‍💻

Over the past few months I’ve been studying with Udacity to learn VR software development. The course has been great so far, so I thought I’d share a little of what I’ve been up to with a game called “Puzzler”. About Puzzler: Puzzler is a simple VR experience for Google Cardboard. Basically anyone with a…

-

Augmented Reality’s A-ha Moment 🎤

Everyone is using the headline, but it’s so good I had to repeat it. It’s such a great example of ARKit in action. Apple’s really on to a winner. Here’s some context for the very young.

-

Hardware for VR development 🖥

When you’re starting out in VR development, it’s easy to think you’ll have to spend the earth on special hardware to get going. The reality is that’s just not true. The 360 image below (which I took with the Google Street View app on iOS) is fairly rough as 360 images go. The room was a mess as I…

-

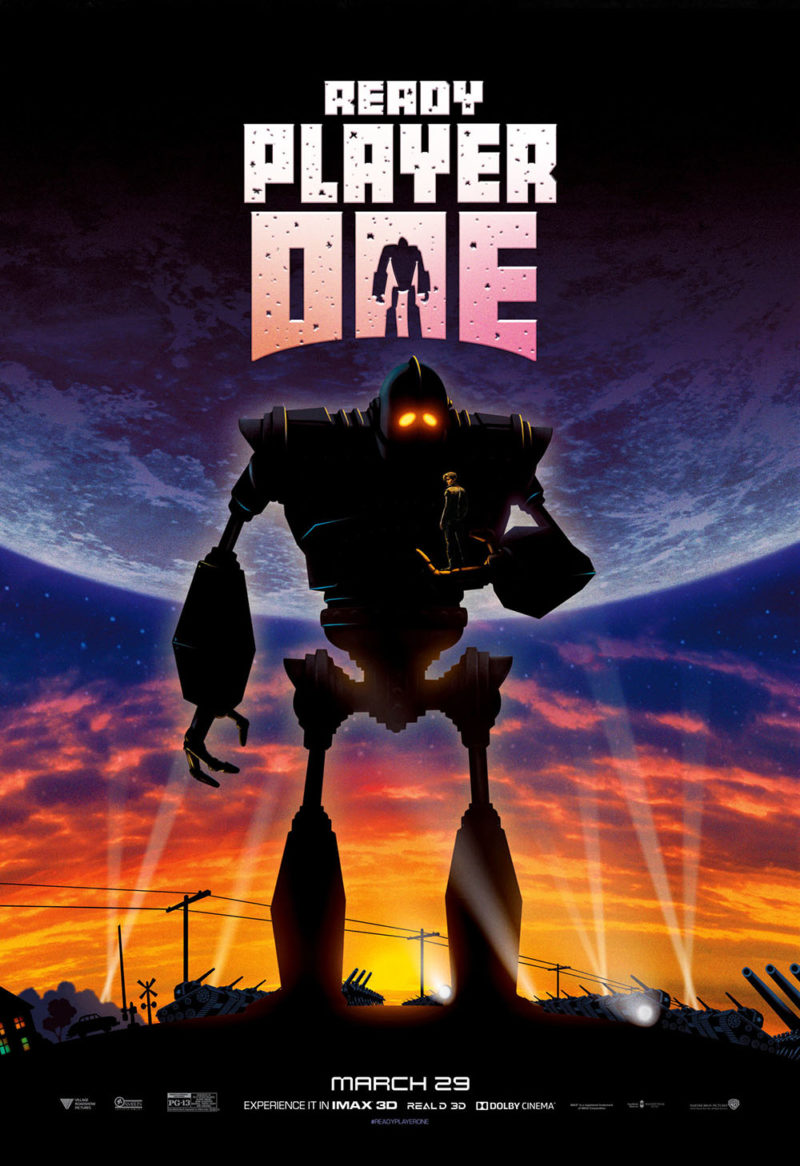

Ready Player One 🕹

I’m six chapters into the book and suddenly there’s a movie trailer. Awesome!

-

$99 AR headset 😍

I seriously doubt the stock-footage-style silliness in this promo video is even remotely connected to reality… but a US$99 AR headset? Sign me up!

-

Locomotion 🚂

You know what’s really fun? Being able to move around and interact in a virtual environment. You know what isn’t? Barfing all over your new Allbirds. That’s exactly what’s at stake when designing a good VR experience, particularly one that includes movement. When developing for VR, it’s important to build a good understanding of…

-

VR and the power of scale 💗

One of the most powerful things about VR is its ability to deliver a shared experience at scale. From an education perspective, this is extremely exciting. The TED talk below is a fantastic example of how VR can bring multi-million-dollar facilities to every student without the cost of traditional real-world labs. Not…

-

Process: User experience testing 🔬

As I said in my previous post on process, it’s important to test your work early and often. This means getting your work in front of your users and collecting feedback, a.k.a. UX testing. When you’re starting out, user testing can be quite intimidating, but with a few tips and a bit of preparation, it…

-

Working with GameObjects in Unity 👾

Given I’m currently learning to develop in Unity, I thought I’d share a few tips I pick up along the way to becoming proficient with it. When creating a scene in Unity, you’ll work with loads of different GameObjects. Your virtual world is built out of them (floors, walls, trees, everything), and you’ll be manipulating…